ML Insights API in 10 minutes

This is a short introduction to the ML Insights API for new users. The goal of ML Insights is to make data and ML monitoring easy on any volume of data, while letting users customize almost every aspect of the framework to suit their business needs. ML Insights strives to be easy to use while supporting most aspects of ML observability use cases.

This tutorial covers only the library-level APIs, as used from a notebook. A Docker-based default application and its setup will be covered in a separate section.

The intended audience includes, but is not limited to:

Data scientists who need to quickly evaluate their data to decide on their ML monitoring use cases.

ML engineers who need to set up long-running monitoring processes to continuously evaluate their models and data.

ISV teams who need to collate data for their models across their ML model fleet.

Setup

First, install the library locally with pip:

```bash
pip install oracle-ml-insights
```
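You can confirm the installation from a notebook with a minimal standard-library check like the following. It assumes two names:

- the distribution name oracle-ml-insights, from the pip command;
- the import package mlm_insights, used in the imports below.

The helper names installed_version and module_importable are hypothetical.

```python
import importlib.metadata
import importlib.util
from typing import Optional


def installed_version(dist_name: str = "oracle-ml-insights") -> Optional[str]:
    """Return the installed version of a pip distribution, or None if it is absent."""
    try:
        return importlib.metadata.version(dist_name)
    except importlib.metadata.PackageNotFoundError:
        return None


def module_importable(module_name: str = "mlm_insights") -> bool:
    """Return True if the top-level package can be located on the import path."""
    return importlib.util.find_spec(module_name) is not None


print("oracle-ml-insights version:", installed_version())
print("mlm_insights importable:", module_importable())
```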

Imports

First, import the loader for the toy data set evaluated in this tutorial, along with pandas:

```python
from sklearn.datasets import load_iris
import pandas as pd
```

Next, import the required ML Insights components:

```python
from mlm_insights.builder.insights_builder import InsightsBuilder
from mlm_insights.constants.types import FeatureType, DataType, VariableType
```

Note

The previous code imports InsightsBuilder. You pass references to all components to the builder. Building the builder then returns the workflow runner component.

The extra imports, such as FeatureType, are supporting types for the schema. See the Getting Started section for details on these components.

Load Data and Schema

Next, load the data and store it in a data frame.

```python
iris_dataset = load_iris(as_frame=True)
iris_data_frame = pd.DataFrame(data=iris_dataset.data, columns=iris_dataset.feature_names)
```

Next, define the schema for this data set so it can be passed to the builder. Automatic schema detection is a possible future option.

```python
def get_input_schema():
    # Map each column name to its data type and variable type.
    return {
        "sepal length (cm)": FeatureType(data_type=DataType.FLOAT, variable_type=VariableType.CONTINUOUS),
        "sepal width (cm)": FeatureType(data_type=DataType.FLOAT, variable_type=VariableType.CONTINUOUS),
        "petal length (cm)": FeatureType(data_type=DataType.FLOAT, variable_type=VariableType.CONTINUOUS),
        "petal width (cm)": FeatureType(data_type=DataType.FLOAT, variable_type=VariableType.CONTINUOUS),
    }
```
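Because the schema is written by hand, its keys have to match the data frame's column names exactly. A small pre-flight check can catch typos before the builder is called. The helper below, schema_mismatches, is hypothetical and not part of ML Insights.

```python
from typing import Iterable, List, Mapping, Tuple


def schema_mismatches(columns: Iterable[str], schema: Mapping[str, object]) -> Tuple[List[str], List[str]]:
    """Return (columns without a schema entry, schema entries without a column)."""
    column_list = list(columns)
    column_set = set(column_list)
    missing_in_schema = [name for name in column_list if name not in schema]
    unknown_in_schema = [name for name in schema if name not in column_set]
    return missing_in_schema, unknown_in_schema


# Self-contained check with placeholder schema values; one column is deliberately left out.
iris_columns = ["sepal length (cm)", "sepal width (cm)", "petal length (cm)", "petal width (cm)"]
partial_schema = {name: None for name in iris_columns[:3]}
print(schema_mismatches(iris_columns, partial_schema))  # (['petal width (cm)'], [])
```

In the tutorial, schema_mismatches(iris_data_frame.columns, get_input_schema()) should return two empty lists, since the schema keys are exactly the iris feature names.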

Run a Data Evaluation

Now that the data is loaded and the schema is defined, pass both to the InsightsBuilder component, which returns the runner (workflow) component. Because no metrics are specified here, Insights decides which metrics to evaluate using a heuristic.

```python
runner = (
    InsightsBuilder()
    .with_input_schema(get_input_schema())
    .with_data_frame(data_frame=iris_data_frame)
    .build()
)
```

Warning

The builder might raise an exception if mandatory components are missing or are set up incorrectly.
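The builder behavior described in the Note and in this Warning follows the classic builder pattern: each with_... call records one component, and build() validates the configuration before returning a runner. The toy classes below (ToyBuilder, ToyRunner, and the with_rows method) are hypothetical. They use only the standard library to illustrate the pattern and are not how ML Insights is implemented. The real builder's validation rules and exception types may differ.

```python
from typing import Any, Dict, List, Optional


class ToyRunner:
    """Stand-in for the workflow runner returned by build()."""

    def __init__(self, schema: Dict[str, Any], rows: List[Dict[str, Any]]) -> None:
        self.schema = schema
        self.rows = rows

    def run(self) -> Dict[str, int]:
        # Placeholder "profile": number of non-missing values per schema feature.
        return {name: sum(1 for row in self.rows if row.get(name) is not None) for name in self.schema}


class ToyBuilder:
    """Fluent builder: each with_* call records one component and returns self."""

    def __init__(self) -> None:
        self._schema: Optional[Dict[str, Any]] = None
        self._rows: Optional[List[Dict[str, Any]]] = None

    def with_input_schema(self, schema: Dict[str, Any]) -> "ToyBuilder":
        self._schema = schema
        return self

    def with_rows(self, rows: List[Dict[str, Any]]) -> "ToyBuilder":
        self._rows = rows
        return self

    def build(self) -> ToyRunner:
        # Fail fast if a mandatory component was never supplied.
        missing = [label for label, value in (("input schema", self._schema), ("data", self._rows)) if value is None]
        if missing:
            raise ValueError("missing mandatory components: " + ", ".join(missing))
        return ToyRunner(self._schema, self._rows)


toy_runner = ToyBuilder().with_input_schema({"x": "float"}).with_rows([{"x": 1.0}, {"x": None}]).build()
print(toy_runner.run())  # {'x': 1}

try:
    ToyBuilder().with_input_schema({"x": "float"}).build()
except ValueError as error:
    print(error)  # missing mandatory components: data
```

Returning self from every with_... method is what makes the chained call style used in the tutorial possible.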

With the runner object built, call its run() method to get the run result. Its profile attribute holds the profile object, which contains all the metric output.

```python
run_result = runner.run()

# Use the Materialized View API to see the results
profile = run_result.profile
print(profile.to_pandas())
```

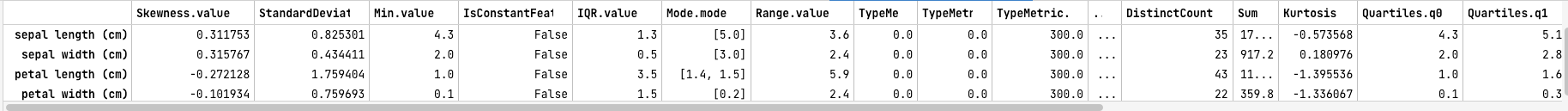

This will produce a result something like:

What the output means

The output is a data frame representation of the profile.

The number of rows is equal to the number of features.

Each column corresponds to a specific metric and holds that metric's value for each feature.
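The standard-library sketch below makes this orientation concrete. It builds a table with the same shape from a few hand-typed sample rows in the iris column layout: one row per feature and one column per metric. The metric set (count, mean, min, max) and the helper names are illustrative assumptions. The metrics Insights actually selects may differ.

```python
import statistics
from typing import Callable, Dict, List, Sequence

# Hand-typed sample rows in the iris column layout (illustrative values only).
rows: List[Dict[str, float]] = [
    {"sepal length (cm)": 5.1, "sepal width (cm)": 3.5, "petal length (cm)": 1.4, "petal width (cm)": 0.2},
    {"sepal length (cm)": 4.9, "sepal width (cm)": 3.0, "petal length (cm)": 1.4, "petal width (cm)": 0.2},
    {"sepal length (cm)": 7.0, "sepal width (cm)": 3.2, "petal length (cm)": 4.7, "petal width (cm)": 1.4},
]

# Illustrative metric set; Insights chooses its own metrics.
METRICS: Dict[str, Callable[[Sequence[float]], float]] = {
    "count": len,
    "mean": statistics.fmean,
    "min": min,
    "max": max,
}


def profile_table(rows: List[Dict[str, float]]) -> Dict[str, Dict[str, float]]:
    """Return {feature: {metric: value}}: one entry per feature, one key per metric."""
    table: Dict[str, Dict[str, float]] = {}
    for feature in rows[0]:
        values = [row[feature] for row in rows]
        table[feature] = {name: fn(values) for name, fn in METRICS.items()}
    return table


def print_table(table: Dict[str, Dict[str, float]]) -> None:
    """Print one line per feature (row) with one column per metric."""
    print("feature".ljust(20) + "".join(name.rjust(8) for name in METRICS))
    for feature, metrics in table.items():
        print(feature.ljust(20) + "".join(f"{metrics[name]:8.2f}" for name in METRICS))


print_table(profile_table(rows))
```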

Let’s take a look at the generated profile output. The iris data set used here has the following features:

sepal length

sepal width

petal length

petal width

Because no metrics were requested explicitly, Insights automatically decided which metrics to generate for each feature. Its heuristic is based on:

The data type of the feature.

The variable type of the feature.

The column type, for example, whether it is an input or a prediction column.
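The heuristic itself is internal to Insights. As a rough mental model only, the hypothetical function below shows how the three properties could combine into a metric list. The string values, the ColumnType enum, and all metric names are placeholders, not the library's types or its actual metric catalogue.

```python
from enum import Enum
from typing import List


class ColumnType(Enum):
    """Hypothetical column roles, mirroring the input-or-prediction distinction."""
    INPUT = "input"
    PREDICTION = "prediction"


def choose_metrics(data_type: str, variable_type: str, column_type: ColumnType) -> List[str]:
    """Toy heuristic: derive a metric list from data type, variable type, and column type."""
    metrics = ["count", "missing_count"]  # generic metrics for any column
    if variable_type == "continuous" and data_type in ("integer", "float"):
        metrics += ["mean", "standard_deviation", "quantiles"]
    elif variable_type == "categorical":
        metrics += ["frequency_distribution"]
    if column_type is ColumnType.PREDICTION:
        metrics.append("prediction_distribution")  # placeholder for prediction-only metrics
    return metrics


# The iris features in this tutorial are all float, continuous input columns.
print(choose_metrics("float", "continuous", ColumnType.INPUT))
# ['count', 'missing_count', 'mean', 'standard_deviation', 'quantiles']
```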

The output is shown in tabular format because to_pandas() returns the data frame representation of the profile. You can also pass specific post processors to the builder to return the profile data in other formats.
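A post processor in this sense turns the computed profile into another representation. The hypothetical export_profile function below sketches the idea with the standard library. It takes a {feature: {metric: value}} mapping and renders it as JSON or CSV. It is not the ML Insights post-processor interface, and the input values are illustrative.

```python
import csv
import io
import json
from typing import Dict


def export_profile(table: Dict[str, Dict[str, float]], fmt: str = "json") -> str:
    """Render a {feature: {metric: value}} mapping as JSON or CSV text."""
    if fmt == "json":
        return json.dumps(table, indent=2, sort_keys=True)
    if fmt == "csv":
        metric_names = sorted({name for metrics in table.values() for name in metrics})
        buffer = io.StringIO()
        writer = csv.writer(buffer)
        writer.writerow(["feature", *metric_names])
        for feature, metrics in table.items():
            # Leave the cell empty when a feature has no value for a metric.
            writer.writerow([feature, *(metrics.get(name, "") for name in metric_names)])
        return buffer.getvalue()
    raise ValueError(f"unsupported format: {fmt}")


example_table = {"sepal length (cm)": {"mean": 5.0, "max": 7.0}}
print(export_profile(example_table, "csv"))
```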

Note

In this example, we manually loaded the data into a data frame and passed the data frame to the builder. This is one of many ways to ingest data. The most commonly used ingestion component is the reader component, which can directly read and process data in different formats from different storage options. See the Getting Started section for more information.
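To illustrate what a reader hides from the rest of the workflow, the hypothetical read_rows function below parses CSV or JSON Lines text into a list of row dictionaries using only the standard library. Its name, signature, and supported formats are assumptions for illustration. The actual reader components and their options are described in the Getting Started section.

```python
import csv
import io
import json
from typing import Any, Dict, List, TextIO


def read_rows(stream: TextIO, fmt: str) -> List[Dict[str, Any]]:
    """Parse CSV or JSON Lines text from an open stream into a list of row dicts."""
    if fmt == "csv":
        # csv.DictReader yields every value as a string.
        return list(csv.DictReader(stream))
    if fmt == "jsonl":
        # One JSON object per non-blank line; JSON keeps numbers as numbers.
        return [json.loads(line) for line in stream if line.strip()]
    raise ValueError(f"unsupported format: {fmt}")


csv_rows = read_rows(io.StringIO("sepal length (cm),sepal width (cm)\n5.1,3.5\n"), "csv")
jsonl_rows = read_rows(io.StringIO('{"sepal length (cm)": 5.1, "sepal width (cm)": 3.5}\n'), "jsonl")
print(csv_rows)    # [{'sepal length (cm)': '5.1', 'sepal width (cm)': '3.5'}]
print(jsonl_rows)  # [{'sepal length (cm)': 5.1, 'sepal width (cm)': 3.5}]
```

Any text stream works as input, for example a file opened with open(path, newline="") or an io.StringIO buffer. CSV values come back as strings, so they still need converting to the types declared in the schema. The rows can then be turned into a data frame with pd.DataFrame(rows).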

Once the metrics are computed, you can run Insights Tests/Test Suites for comprehensive validation of machine learning models and data. See the Test/Test Suites Component section for details.
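Conceptually, a test is a named condition on a computed metric, and a test suite evaluates many of them together. The standard-library sketch below illustrates only that idea. MetricTest, run_suite, and the example thresholds are hypothetical; the real Insights Tests/Test Suites API is documented in that section.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class MetricTest:
    """A named condition on one metric of one feature (hypothetical)."""
    name: str
    feature: str
    metric: str
    condition: Callable[[float], bool]


def run_suite(profile_table: Dict[str, Dict[str, float]], tests: List[MetricTest]) -> Dict[str, bool]:
    """Evaluate each test against a {feature: {metric: value}} mapping."""
    results: Dict[str, bool] = {}
    for test in tests:
        value = profile_table.get(test.feature, {}).get(test.metric)
        # A metric that was never computed counts as a failure instead of raising.
        results[test.name] = value is not None and test.condition(value)
    return results


toy_profile = {"petal width (cm)": {"min": 0.1, "max": 2.5}}
toy_tests = [
    MetricTest("petal width non-negative", "petal width (cm)", "min", lambda v: v >= 0),
    MetricTest("petal width mean below 3", "petal width (cm)", "mean", lambda v: v < 3),
]
print(run_suite(toy_profile, toy_tests))
# {'petal width non-negative': True, 'petal width mean below 3': False}
```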